Neural Network – Connecting Mid- or Far-Infrared Face Images to Visible Light Images

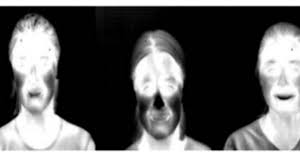

Cross-modal face matching between the thermal and visible ranges is a desirable capability, especially for night-time surveillance and security applications. Owing to the large modality gap, thermal-to-visible face recognition is one of the most challenging face matching problems. Recently, Saquib Sarfraz and Rainer Stiefelhagen at Karlsruhe Institute of Technology in Germany worked out, for the first time, a way to connect a mid- or far-infrared image of a face with its visible light counterpart, which they achieved by training a neural network to do the whole task. Matching an infrared image of a face to its visible light counterpart is not easy, but it is the kind of problem that deep neural networks are beginning to solve.

The problem with infrared surveillance videos or infrared CCTV images is that individuals can be difficult to recognise, because faces look different in the infrared. Matching these images to a person's usual appearance is an important unsolved problem. The difficulty is that the relationship between how a face looks in infrared and how it looks in visible light is highly nonlinear. This is especially troublesome for footage taken in the mid- and far-infrared, which relies on passive sensors that detect emitted rather than reflected light.

Visible Light Images – High Resolution/Infrared Images – Low Resolution

The way a face emits infrared light is completely different from the way it reflects light. The emissions vary with the temperature of the air and of the skin, which in turn depends on the person's activity level or on whether they have a fever. Another issue that makes comparison difficult is that visible light images tend to have a high resolution while far-infrared images have a much lower resolution, owing to the nature of the cameras used. Together, these factors make it difficult to match an infrared face with its visible light counterpart. The recent success of deep neural networks on all kinds of difficult problems gave Sarfraz and Stiefelhagen an idea: they wondered whether a network could be trained to recognise visible light faces by looking at infrared versions. Two major factors have recently combined to make neural networks very powerful.

Better Understanding/Availability of Annotated Datasets

The first is a better understanding of how to build and tweak the networks to perform their task, a process that has led to the development of so-called deep neural nets and something Sarfraz and Stiefelhagen could learn from other work. The second is the availability of large annotated datasets that can be used to train these networks. For instance, accurate automated face recognition has become possible thanks to the creation of massive banks of images in which people's faces have been isolated and identified by human observers through crowdsourcing services such as Amazon's Mechanical Turk. Such datasets are much harder to come by for infrared-to-visible comparisons.

Nevertheless, Sarfraz and Stiefelhagen got around this issue by using an existing dataset. It was created at the University of Notre Dame and comprises 4,585 images of 82 individuals, taken either in visible light at a resolution of 1600 x 1200 pixels or in the far infrared at 312 x 239 pixels. The data contains images of individuals laughing and smiling as well as with neutral expressions, taken in different sessions to capture how their appearance changes from day to day, and under two different lighting conditions.

Fast/Capable of Running in Real Time

Each image was then divided into sets of overlapping patches of 20 x 20 pixels in order to greatly increase the size of the database. Sarfraz and Stiefelhagen then used the images of the first 41 individuals to train their neural net and the images of the other 41 people to test it. The results are interesting. Sarfraz and Stiefelhagen say that ‘the presented approach improves the state-of-the-art by more than 10 percent’. The net can now match a thermal image to its visible counterpart in a mere 35 milliseconds. They add that ‘this is very fast as well as capable of running in real time at ∼ 28 fps’ (35 milliseconds per match works out to 1/0.035 ≈ 28.6 matches per second). It is by no means flawless, though: at best, its accuracy is just over 80 percent when it has a wide array of visible images to compare against the thermal image.
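The patch step described above can be sketched in a few lines of Python. This is only an illustration, not the authors' code: the source gives the 20 x 20 patch size but not the amount of overlap, so the 10-pixel stride and the `extract_patches` helper below are assumptions.

```python
import numpy as np

def extract_patches(image, patch=20, stride=10):
    """Cut an image into overlapping patch x patch windows.

    `stride` is an assumed value: any stride smaller than `patch`
    makes neighbouring windows overlap.
    """
    h, w = image.shape[:2]
    if h < patch or w < patch:
        raise ValueError("image is smaller than the patch size")
    windows = [
        image[y:y + patch, x:x + patch]
        for y in range(0, h - patch + 1, stride)
        for x in range(0, w - patch + 1, stride)
    ]
    return np.stack(windows)

# Placeholder far-infrared frame at the 312 x 239 resolution quoted above
# (rows = height, columns = width); real pixel data would go here.
thermal = np.zeros((239, 312), dtype=np.float32)
patches = extract_patches(thermal)
print(patches.shape)  # (number_of_patches, 20, 20)
```

With these assumed settings a single low-resolution thermal frame already yields several hundred training patches, which is how patching enlarges a small dataset.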

The one-to-one comparison accuracy is only 55 percent. Better accuracy should be possible with larger datasets and a more powerful network. Of the two, creating a dataset that is larger by an order of magnitude would be the more difficult job.
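The article does not say how these accuracy figures were computed. One common way to score face matching is rank-1 identification: each thermal "probe" is compared against a gallery of visible light images, and the match counts as correct if the most similar gallery entry belongs to the same person. The sketch below assumes that protocol, uses cosine similarity, and runs on made-up two-dimensional feature vectors; `rank1_accuracy` is a hypothetical helper, not the authors' evaluation code.

```python
import numpy as np

def rank1_accuracy(probe_feats, probe_ids, gallery_feats, gallery_ids):
    """Fraction of probes whose nearest gallery entry has the same identity."""
    # Normalise rows so the dot product equals cosine similarity.
    p = probe_feats / np.linalg.norm(probe_feats, axis=1, keepdims=True)
    g = gallery_feats / np.linalg.norm(gallery_feats, axis=1, keepdims=True)
    sims = p @ g.T                    # shape: (n_probes, n_gallery)
    best = np.argmax(sims, axis=1)    # index of the most similar gallery entry
    return float(np.mean(np.asarray(gallery_ids)[best] == np.asarray(probe_ids)))

# Toy data (invented for illustration): person 0 has two gallery images,
# person 1 has one.
gallery_feats = np.array([[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]])
gallery_ids = [0, 1, 0]
probe_feats = np.array([[0.8, 0.2], [0.1, 0.9], [0.6, 0.4]])
probe_ids = [0, 1, 1]

acc = rank1_accuracy(probe_feats, probe_ids, gallery_feats, gallery_ids)
print(f"rank-1 accuracy: {acc:.2f}")
```

Under this kind of scoring, a gallery with several visible images per person gives each probe more chances to land near a correct match, which is consistent with the higher figure reported when many visible images are available.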

However, it is not hard to imagine such a database being created fairly quickly, given that the interested parties are likely to be the military, law enforcement agencies and governments, which tend to have deep pockets when it comes to security-related technology.
