Biologically Inspired Soft Robots

Early in the decade, George Whitesides helped rewrite the rules of what a machine could be with the development of biologically inspired soft robots, and he is now ready to rewrite them once again with the help of some plastic drinking straws. Inspired by arthropods such as insects and spiders, Whitesides and Alex Nemiroski, a former postdoctoral fellow in Whitesides' Harvard lab, have developed a type of semi-soft robot that is capable of standing and walking.

The team has also developed a robotic water strider that can propel itself along the surface of a liquid. The robots are described in a recent paper published in the journal Soft Robotics. Unlike earlier generations of soft robots, which could stand and walk only awkwardly by inflating air chambers in their bodies, the new robots are designed to be much quicker.

The researchers hope the robots will eventually be used in search operations after natural disasters or in conflict zones, although practical applications still appear to be far off. Whitesides, the Woodford L. and Ann A. Flowers University Professor at Harvard, said that if one looks around the world, there are plenty of things, like spiders and insects, that are very agile.

Flexible Organisms on the Planet

They can move rapidly, climb over all kinds of objects, and do things that large, hard robots cannot do because of their weight and form factor. They are among the most flexible organisms on the planet, and the question was how we could build something like that.

According to Nemiroski, the answer came in the form of the average drinking straw. He explained that it all began with an observation George had made: polypropylene tubes have an excellent strength-to-weight ratio. That led to developing something with more structural support than purely soft robots tend to have.

That became the building block, and the team then drew on arthropods to figure out how to make a joint and how to use the tubes as an exoskeleton. After that, the question was how far one's imagination could go: once you have a Lego brick, what kind of castle can you build with it? He added that what they built was a surprisingly simple joint.

Whitesides and Nemiroski started by cutting a notch in the straws, allowing them to bend. They then inserted short lengths of tubing that, when inflated, forced the joints to extend. A rubber tendon attached on either side of the joint then pulled it closed again when the tubing deflated.

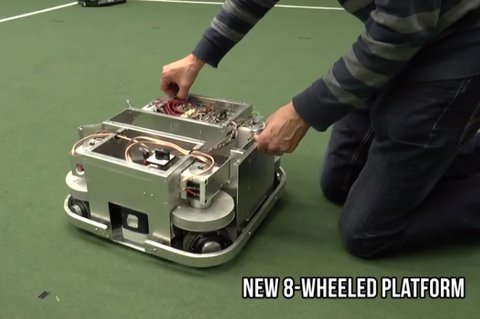

An Arduino-Based Microcontroller

Equipped with this simple concept, the team built a one-legged robot capable of crawling, then moved up in complexity by adding a second and later a third leg, which allowed the robot to stand on its own. Nemiroski said that with every new level of system complexity they had to go back to the original joint and modify it so that it could exert more force or support the weight of larger robots.

Eventually, when they graduated to six- and eight-legged arthrobots, making them walk became a programming challenge. The team looked at the way ants and spiders sequence the motion of their limbs, and then tried to work out whether aspects of those motions applied to their robots or whether they needed to develop their own kind of walking tailored to these specific joints.

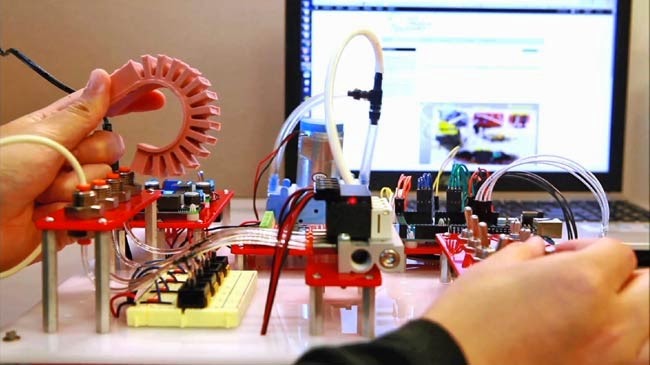

Although Nemiroski and his colleagues were able to control simple robots by hand using syringes, they turned to computers to sequence the limbs as the designs grew more complex. He explained that they put together an Arduino-based microcontroller that uses valves and a central compressor, which gave them the freedom to evolve the robots' gaits quickly.
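To illustrate why putting valves under software control makes a gait quick to change, here is a minimal Python sketch. It is not the team's Arduino firmware, which the article does not show; the names `ValveEvent`, `expand_gait` and `step_s` are hypothetical. A gait is treated as a table of phases, each listing which joints should be pressurized, and the sketch expands that table into timed valve-switching events.

```python
from dataclasses import dataclass

# Hypothetical model: each joint has one valve that connects its balloon either
# to the shared compressor ("pressure") or to the open air ("atmosphere").
PRESSURE = "pressure"
ATMOSPHERE = "atmosphere"


@dataclass(frozen=True)
class ValveEvent:
    time_s: float  # when the controller switches the valve
    valve: int     # index of the joint's valve
    state: str     # PRESSURE or ATMOSPHERE


def expand_gait(phases, n_valves, step_s):
    """Turn a gait table into timed valve-switching events.

    phases: list of sets; phase k lists the valves to pressurize during
    step k. Every other valve is vented. Only state changes are emitted.
    """
    events = []
    current = [ATMOSPHERE] * n_valves  # assume all joints start deflated
    for k, pressurized in enumerate(phases):
        for v in range(n_valves):
            wanted = PRESSURE if v in pressurized else ATMOSPHERE
            if wanted != current[v]:
                events.append(ValveEvent(k * step_s, v, wanted))
                current[v] = wanted
    return events


# Changing the gait only means editing this (hypothetical) table.
gait = [{0, 2}, {1, 3}]
for event in expand_gait(gait, n_valves=4, step_s=0.5):
    print(event)
```

In this design the gait lives entirely in data, so trying a new sequence means editing a list rather than rewiring hardware, which is one plausible reading of the "freedom to evolve their gait" the researchers describe.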

Binary Joint Motion and a Simple Valving System

Although Nemiroski and his colleagues succeeded in reproducing the distinctive "triangle" gait of ants with their six-legged robot, imitating a spider-like gait proved far trickier. He explained that a spider modulates the speed at which it extends and contracts its joints to carefully time which limbs are moving forward and which are moving backward at any given moment.

In their case, however, Nemiroski noted, the motion of each joint is binary because of the simplicity of the valving system. You either switch the valve to the pressure source, inflating the balloon in the joint and extending the limb, or switch it to atmosphere, deflating the joint and retracting the limb. For the eight-legged robot, they therefore had to develop a gait compatible with the binary motion of the joints.
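The binary constraint can be made concrete with a short Python sketch of the ant-style "triangle" (tripod) gait mentioned above. The leg numbering, the one-joint-per-leg assumption and the choice that a tripod's joints are inflated during its half-cycle are all hypothetical simplifications, not details from the paper. Each leg state is simply `True` (valve to pressure, limb extended) or `False` (valve to atmosphere, limb retracted).

```python
# Hypothetical leg numbering for a six-legged robot (one actuated joint per leg):
#   left side:  0 = front, 1 = middle, 2 = rear
#   right side: 3 = front, 4 = middle, 5 = rear
NUM_LEGS = 6

# Each tripod ("triangle") takes the front and rear legs of one side
# plus the middle leg of the other side.
TRIPOD_A = frozenset({0, 2, 4})
TRIPOD_B = frozenset({1, 3, 5})


def tripod_gait(n_cycles):
    """Return one tuple of leg states per half-cycle.

    True means the leg's valve is switched to the pressure source (joint
    inflated, limb extended); False means it is vented to atmosphere (joint
    deflated, limb pulled back by the rubber tendon). There is no in-between.
    """
    states = []
    for k in range(2 * n_cycles):
        extended = TRIPOD_A if k % 2 == 0 else TRIPOD_B
        states.append(tuple(leg in extended for leg in range(NUM_LEGS)))
    return states


def valve_commands(state):
    """Translate a boolean leg state into per-valve commands."""
    return ["pressure" if on else "atmosphere" for on in state]


for state in tripod_gait(1):
    print(valve_commands(state))
# ['pressure', 'atmosphere', 'pressure', 'atmosphere', 'pressure', 'atmosphere']
# ['atmosphere', 'pressure', 'atmosphere', 'pressure', 'atmosphere', 'pressure']
```

Every gait expressible this way is a sequence of on/off vectors; a spider-like gait that varies how fast each joint extends would need a richer state per leg, such as a flow rate, which this representation cannot capture.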

It was not an entirely new gait, but it could not accurately duplicate the way a spider moves. Nemiroski said that developing a scheme that can vary how quickly the legs actuate would be a useful goal for future work, and would require programmable control over the flow rate supplied to each joint.

Academic Prototypes

Whitesides believes that the techniques used in their development, especially the use of everyday off-the-shelf materials, could point the way toward future innovation, though it will likely be years before the robots make their way into real-world applications.

He said he sees no reason to reinvent the wheel: drinking straws can be made in enormous numbers at effectively zero cost, and they have great strength, so why not use them? Because they are academic prototypes, the robots are very lightweight, but it would be quite easy to imagine building them from a lightweight structural polymer that could hold considerable weight.

Nemiroski added that what is really attractive here is the simplicity, something George has been championing for some time and something Nemiroski grew to appreciate deeply while working in his lab.