Ever since modern computers appeared, people have asked how to compile faster and smaller code. Better code optimization can significantly reduce the operational cost of large data center applications. Code size matters most for mobile and embedded systems, where the compiled binary must fit within a tight code size budget. As the field has advanced, the remaining headroom has been squeezed out with increasingly complicated heuristics, which impedes maintenance and further improvement.

What is MLGO?

MLGO is a machine learning framework for compiler optimization.

Know More About MLGO:

Recent research suggests that ML can unlock more opportunities in compiler optimization by replacing complicated heuristics with ML policies. However, adopting ML in a general-purpose, industrial-strength compiler remains a challenge.

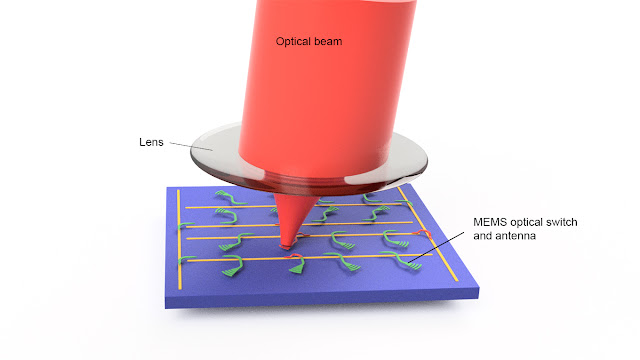

This is the problem that MLGO, a Machine Learning Guided Compiler Optimizations Framework, sets out to address. It is the first industrial-grade general framework for systematically integrating ML techniques into LLVM.

LLVM is an open-source industrial compiler infrastructure that is widely used to build mission-critical, high-performance software. MLGO uses reinforcement learning (RL) to train neural networks to make decisions that can replace heuristics in LLVM. Two MLGO optimizations for LLVM are described here: the first reduces code size through inlining, and the second improves code performance through register allocation (regalloc). Both are available in the LLVM repository.

How Does MLGO Work?

Inlining helps reduce code size by making decisions that enable the removal of redundant code. Consider an example in which the caller function foo() calls the callee function bar(), which in turn calls baz().

Inlining both call sites yields a single, simple foo() function, which reduces the code size. In real code, many functions call each other, and together they form a call graph.

During the inlining phase, the compiler traverses the call graph and decides, for each caller-callee pair, whether to inline it. This is a sequential decision process, because earlier inlining decisions change the call graph and therefore affect later decisions and the final outcome. In the example, the call graph foo() → bar() → baz() needs a "yes" decision on both edges for the code size reduction to happen.
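
To make the sequential nature of these decisions concrete, here is a minimal Python sketch of the foo() → bar() → baz() chain. The size model (`PROLOGUE`, `CALL_COST`, the body sizes) and the helper names are assumptions made up for illustration; they are not LLVM's cost model, and the toy does not handle recursive calls.

```python
from dataclasses import dataclass, field

PROLOGUE = 3   # assumed fixed size of a function's entry/exit code
CALL_COST = 2  # assumed size of one call instruction


@dataclass
class Function:
    body_size: int                       # size excluding prologue and calls
    callees: list = field(default_factory=list)


def make_example():
    """The foo() -> bar() -> baz() chain, with made-up sizes."""
    return {
        "foo": Function(4, ["bar"]),
        "bar": Function(3, ["baz"]),
        "baz": Function(2, []),
    }


def total_size(funcs, roots=("foo",)):
    """Size of every function still reachable from the roots (the rest is dead)."""
    seen, stack = set(), list(roots)
    while stack:
        name = stack.pop()
        if name not in seen:
            seen.add(name)
            stack.extend(funcs[name].callees)
    return sum(
        PROLOGUE + funcs[n].body_size + CALL_COST * len(funcs[n].callees)
        for n in seen
    )


def run_inliner(funcs, should_inline):
    """Visit call edges in order; each 'yes' rewrites the graph for later edges."""
    worklist = [(caller, callee)
                for caller, f in funcs.items() for callee in f.callees]
    while worklist:
        caller, callee = worklist.pop(0)
        if callee not in funcs[caller].callees:
            continue  # this edge no longer exists
        if should_inline(caller, callee):
            funcs[caller].callees.remove(callee)
            funcs[caller].body_size += funcs[callee].body_size
            funcs[caller].callees.extend(funcs[callee].callees)
            # Inlining exposes the callee's own calls as new edges of the caller.
            worklist.extend((caller, c) for c in funcs[callee].callees)
    return total_size(funcs)


print(run_inliner(make_example(), lambda caller, callee: False))  # 22
print(run_inliner(make_example(),
                  lambda caller, callee: (caller, callee) == ("foo", "bar")))  # 17
print(run_inliner(make_example(), lambda caller, callee: True))   # 12
```

In this toy model, the edge foo() → baz() only exists after the first "yes" decision, which is why the order of decisions matters.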

Before MLGO, the inline / no-inline decision was made by a heuristic that became increasingly hard to improve over time. MLGO replaces the heuristic with an ML model.

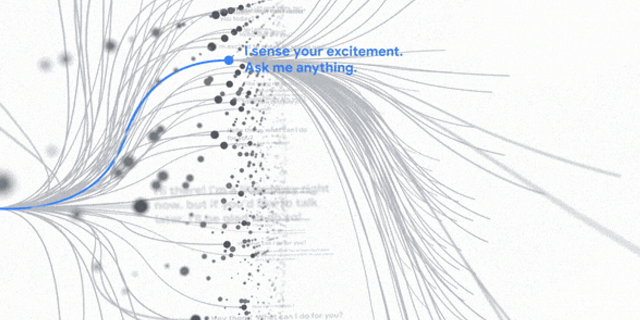

During the call graph traversal, the compiler asks a neural network whether to inline a particular caller-callee pair by feeding in relevant features (inputs) from the graph. It executes the decisions sequentially until the entire call graph has been traversed.
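
The difference between a hand-written heuristic and a learned policy can be sketched as two interchangeable "advisor" callables that take the same features. Everything here is hypothetical: the feature names, the threshold, the network shape, and the hand-picked (untrained) weights are illustrative assumptions, not the features or model that MLGO uses.

```python
import math

# Hypothetical feature names, chosen only for this sketch.
FEATURES = ("callee_size", "callee_num_callers", "caller_size")


def heuristic_advisor(features):
    """Stand-in for a hand-written rule: inline only small callees."""
    return features["callee_size"] <= 5


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class TinyPolicy:
    """A one-hidden-layer network mapping features to P(inline)."""

    def __init__(self, w_hidden, b_hidden, w_out, b_out):
        self.w_hidden, self.b_hidden = w_hidden, b_hidden
        self.w_out, self.b_out = w_out, b_out

    def inline_probability(self, features):
        x = [float(features[name]) for name in FEATURES]
        # Hidden layer with ReLU activation.
        hidden = [
            max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(self.w_hidden, self.b_hidden)
        ]
        logit = sum(w * h for w, h in zip(self.w_out, hidden)) + self.b_out
        return sigmoid(logit)

    def __call__(self, features):
        return self.inline_probability(features) > 0.5


# Hand-picked weights for illustration; a real policy would be trained.
EXAMPLE_POLICY = TinyPolicy(
    w_hidden=[[-0.5, -1.0, 0.0], [0.2, 1.0, 0.0]],
    b_hidden=[4.0, -2.0],
    w_out=[1.0, -1.0],
    b_out=-0.5,
)

small = {"callee_size": 3, "callee_num_callers": 1, "caller_size": 10}
shared = {"callee_size": 20, "callee_num_callers": 6, "caller_size": 10}
print(EXAMPLE_POLICY(small), EXAMPLE_POLICY(shared))  # True False
```

Because both advisors expose the same call signature, the traversal code does not need to know which one it is consulting.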

MLGO trains the decision network (the policy) with RL, using policy gradient and evolution strategies algorithms. Training alternates between compiling with the current policy to collect data and using that data to improve the policy. During the inlining stage, the compiler consults the model under training for each inline / no-inline decision. When the compilation finishes, it produces a log of the sequential decision process (state, action, reward). The log is then passed to the trainer to update the model. This process repeats until a satisfactory model is obtained.
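
The compile, log, update loop can be sketched with a basic evolution strategies update on a toy problem. This is only an illustration under stated assumptions: the call sites, the `OVERHEAD` constant, the per-step reward, and the linear policy are all invented for the sketch, and it shows evolution strategies only, not policy gradient and not MLGO's actual trainer.

```python
import random

OVERHEAD = 5  # assumed size saved when a call is removed by inlining

# Hypothetical call sites: (callee_size, number_of_callers_of_the_callee).
CALL_SITES = [(3, 1), (40, 4), (6, 2), (25, 3), (2, 5), (60, 2), (8, 1), (30, 6)]


def size_saved(callee_size, num_callers, inline):
    """Toy reward: inlining saves call overhead but duplicates shared callees."""
    if not inline:
        return 0
    duplicated = callee_size if num_callers > 1 else 0
    return OVERHEAD - duplicated


def compile_with_policy(theta):
    """One toy 'compilation'; returns its log of (state, action, reward)."""
    log = []
    for state in CALL_SITES:
        size, callers = state
        action = theta[0] + theta[1] * size + theta[2] * callers > 0
        log.append((state, action, size_saved(size, callers, action)))
    return log


def episode_reward(log):
    return sum(reward for _, _, reward in log)


def train(iterations=30, population=20, sigma=0.5, lr=0.1, seed=0):
    """Evolution strategies: perturb the policy, compile, move toward better noise."""
    rng = random.Random(seed)
    theta = [0.0, 0.0, 0.0]
    best_theta = list(theta)
    best_reward = episode_reward(compile_with_policy(theta))
    for _ in range(iterations):
        noises, rewards = [], []
        for _ in range(population):
            eps = [rng.gauss(0, 1) for _ in theta]
            candidate = [t + sigma * e for t, e in zip(theta, eps)]
            reward = episode_reward(compile_with_policy(candidate))
            noises.append(eps)
            rewards.append(reward)
            if reward > best_reward:  # remember the best policy seen so far
                best_theta, best_reward = candidate, reward
        mean = sum(rewards) / population
        std = (sum((r - mean) ** 2 for r in rewards) / population) ** 0.5 or 1.0
        for i in range(len(theta)):
            grad = sum((r - mean) / std * eps[i]
                       for r, eps in zip(rewards, noises)) / (population * sigma)
            theta[i] += lr * grad
    return best_theta, best_reward


best_theta, best_reward = train()
print(best_theta, best_reward)
```

The starting policy never inlines and scores 0, so any positive `best_reward` means a sampled policy saved size on this toy workload.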

The trained policy is then embedded into the compiler, where it provides inline / no-inline decisions during compilation. Unlike in the training scenario, the policy does not produce a log. The TensorFlow model is embedded with XLA AOT, which converts the model into executable code. This avoids a TensorFlow runtime dependency and its overhead, minimizing the extra time and memory cost that the ML model adds to compilation.

The inlining-for-size policy was trained on a large internal software package containing 30k modules. The trained policy generalizes when applied to compile other software, achieving a 3% ~ 7% size reduction. Generalizability across time matters as well as generalizability across software: because both the compiler and the software are under active development, the policy must retain good performance for a reasonable time.

Register Allocation (for performance)

As a general framework, MLGO was also used to improve the register allocation pass, which improves code performance in LLVM. Register allocation solves the problem of assigning physical registers to live ranges (that is, variables).

As the code executes, different live ranges finish at different times, which frees up their registers for later use. In the example, every "add" and "multiply" instruction requires all of its operands and its result to be in physical registers. Live range x is allocated to the green register and finishes before the live ranges in the blue and yellow registers. Once x has finished, the green register becomes available and is assigned to another live range.

When it is time to allocate live range q, no registers are available. The register allocation pass must therefore decide which live range (if any) to evict from its register to make room for q. This is known as the "live range eviction" problem, and it is the decision for which the model is trained to replace the original heuristics. In this example, the pass evicts z from the yellow register and assigns that register to q and to the first half of z.
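
The eviction decision can be sketched as a pluggable callback inside a toy allocator. The sketch rests on several assumptions: the interval endpoints and weights in `EXAMPLE` are invented, registers 0, 1, and 2 stand in for green, blue, and yellow, and an evicted range is spilled whole rather than split as in the walkthrough. It is not LLVM's allocator.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LiveRange:
    name: str
    start: int
    end: int        # exclusive: the register is free again at this point
    weight: float   # assumed spill cost; higher means more expensive to evict


def evict_cheapest(active, incoming):
    """Stand-in heuristic: evict the cheapest active range, or return None
    to leave the incoming range without a register."""
    victim = min(active, key=lambda r: r.weight)
    return victim if victim.weight < incoming.weight else None


def allocate(ranges, num_regs, choose_victim=evict_cheapest):
    """Assign registers in order of start point; consult choose_victim when full."""
    free = list(range(num_regs))
    assigned = {}   # LiveRange -> register currently holding it
    result = {}     # name -> register index, or "spill"
    for lr in sorted(ranges, key=lambda r: r.start):
        # Finished live ranges give their registers back.
        for done in [r for r in assigned if r.end <= lr.start]:
            free.append(assigned.pop(done))
        if free:
            reg = free.pop(0)
        else:
            victim = choose_victim(list(assigned), lr)
            if victim is None:
                result[lr.name] = "spill"
                continue
            reg = assigned.pop(victim)
            result[victim.name] = "spill"
        assigned[lr] = reg
        result[lr.name] = reg
    return result


# Made-up intervals loosely mirroring x, y, z, t, q from the text.
EXAMPLE = [
    LiveRange("x", 0, 2, 1.0),
    LiveRange("y", 0, 8, 5.0),
    LiveRange("z", 1, 9, 2.0),
    LiveRange("t", 2, 7, 4.0),
    LiveRange("q", 4, 6, 3.0),
]

print(allocate(EXAMPLE, num_regs=3))
# {'x': 0, 'y': 1, 'z': 'spill', 't': 0, 'q': 2}
```

Swapping `choose_victim` for a different callable changes which range loses its register, and a learned policy would be plugged in at that same point.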

Now consider the unassigned second half of live range z. There is a conflict again, and this time live range t is evicted and split, so the first half of t and the final part of z end up in the green register. The middle part of z corresponds to the instruction q = t * y, where z is not used, so z is not assigned to any register there. Its value is stored on the stack from the yellow register and later reloaded into the green register. The same happens to t. This adds extra load and store instructions to the code and degrades performance. The goal of the register allocation algorithm is to reduce such inefficiencies as much as possible, and this is used as the reward that guides RL policy training.
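
One way to picture a reward built from this spill code is to weight each extra load or store by how often it runs and compare against a baseline allocation. The exact reward MLGO uses is not given here, so the formula, the cost table, and the function names below are assumptions for illustration only.

```python
# Assumed relative cost of each kind of spill instruction.
SPILL_COST = {"load": 1.0, "store": 1.0}


def spill_penalty(spill_ops):
    """spill_ops: iterable of (kind, execution_frequency) pairs."""
    return sum(SPILL_COST[kind] * freq for kind, freq in spill_ops)


def relative_reward(baseline_ops, policy_ops):
    """Fraction of the baseline's spill penalty that the policy removed.

    Positive means fewer or colder spills than the baseline, negative means worse.
    """
    base = spill_penalty(baseline_ops)
    if base == 0:
        return 0.0
    return (base - spill_penalty(policy_ops)) / base


baseline = [("store", 10), ("load", 10)]   # spill inside a hot block
policy = [("load", 5)]                     # one reload in a colder block
print(relative_reward(baseline, policy))   # 0.75
```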

The register allocation policy was trained on a large Google internal software package. It achieves 0.3% ~ 1.5% improvements in queries per second (QPS).

The bottom line:

MLGO is a framework for systematically integrating ML techniques into LLVM, an industrial compiler. It is a general framework that can be extended in two directions: deeper, by adding more features and applying better RL algorithms, and broader, by applying it to more optimization heuristics.