Nvidia's chief plays down the challenge from Google's AI chip

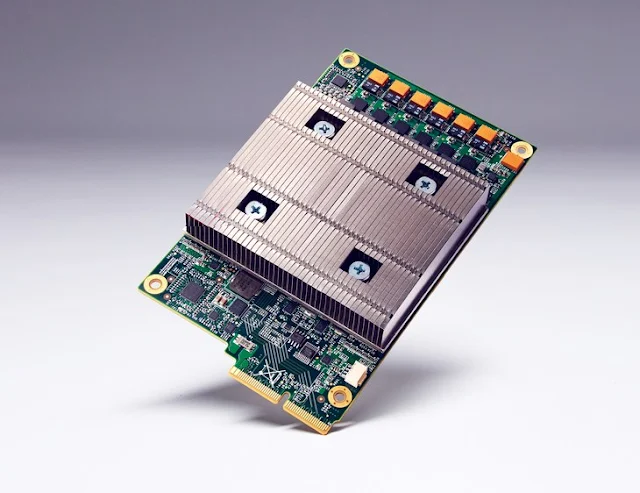

Nvidia has staked much of its future on supplying the powerful graphics chips used for artificial intelligence, so it wasn't a great day for the company when Google announced a couple of weeks ago that it had built its own AI chip for use in its data centers. Google's TPU (Tensor Processing Unit) was designed specifically for deep learning, a branch of AI in which software trains itself to get better at interpreting the world around it, so that it can recognize objects or understand spoken language.

TPUs have been in use at Google for more than a year, including for search and for navigation in Google Maps. According to Google, they deliver "an order of magnitude better-optimized performance per watt for machine learning" than other options.

That could be bad news for Nvidia, whose new Pascal microarchitecture was designed with machine learning in mind. Having pulled out of the smartphone market, the company is looking to AI, gaming and virtual reality for growth.

But Nvidia CEO Jen-Hsun Huang doesn't see much of a threat. For one thing, he said, deep learning has two aspects, training and inference, and GPUs are still much better at training. Training involves feeding an algorithm huge amounts of data so that it gets better at recognizing something, while inference is when the algorithm applies what it has learned to an unfamiliar input.

"Training is billions of times more complex than inference," he said, and training is where Nvidia's GPUs excel. Google's TPU, on the other hand, is "just for inference," Huang said. Training an algorithm can take months, he said, while inference often happens in a fraction of a second.
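To make the training/inference split concrete, here is a toy Python sketch. It is purely illustrative and is not Nvidia's or Google's code: it assumes a one-variable linear model fitted by plain gradient descent, whereas real deep-learning systems train neural networks with vastly more parameters on GPUs or other accelerators. The point is the asymmetry Huang describes: training loops over the whole dataset thousands of times, while inference is a single cheap calculation on a new input.

```python
# Toy illustration (hypothetical, not production ML code): fit y = w*x + b
# by gradient descent (training), then apply the learned parameters to an
# unseen input (inference).

def train(xs, ys, epochs=2000, lr=0.01):
    """Training: repeatedly sweep the whole dataset, nudging w and b
    to reduce the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    per_example_updates = 0  # counts how much work training does
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = (w * x + b) - y
            grad_w += 2 * err * x / n  # d(MSE)/dw contribution
            grad_b += 2 * err / n      # d(MSE)/db contribution
            per_example_updates += 1
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, per_example_updates


def infer(w, b, x):
    """Inference: a single forward pass using the learned parameters."""
    return w * x + b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
w, b, steps = train(xs, ys)
print(f"learned w={w:.2f}, b={b:.2f} after {steps} per-example gradient computations")
print(f"prediction for x=10: {infer(w, b, 10.0):.2f}")
```

Here training performs 10,000 per-example gradient computations to recover a slope near 2 and an intercept near 1, while each prediction afterwards costs one evaluation of `w * x + b`.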

Beyond that distinction, he noted that many of the companies that run inference don't have their own processors.

"For companies that make their own inference chips, that's not a problem for us, we're delighted by that," Huang said. "But there are millions of nodes in the hyperscale data centers of companies that don't make their own TPUs. The best solution we can offer them is Pascal."

That Google built its own chip shouldn't come as a big surprise. The technology can be a competitive advantage for large online service providers, and giants like Google, Facebook and Microsoft already design their own servers. Designing a processor is the next logical step, albeit a challenging one.

As for whether developing the TPU has affected Google's other chip purchases, a Google engineer told The Wall Street Journal: "We're still literally buying tons of CPUs and GPUs."

Meanwhile, Nvidia's Huang, like others in the industry, expects deep learning and AI to become ubiquitous. The last decade was the era of the mobile cloud, he said, and we are now in the era of artificial intelligence. Companies need to better understand the mass of data they collect, and that will happen through AI.
